Bringing an AI Product to Market

The Core Responsibilities of the AI Product Manager

Product Managers are responsible for the successful development, testing, release, and adoption of a product, and for leading the team that implements those milestones. Product managers for AI must satisfy these same responsibilities, tuned for the AI lifecycle. In the first two articles in this series, we suggest that AI Product Managers (AI PMs) are responsible for:

- Deciding on the core function, audience, and desired use of the AI product

- Evaluating the input data pipelines and ensuring they are maintained throughout the entire AI product lifecycle

- Orchestrating the cross-functional team (Data Engineering, Research Science, Data Science, Machine Learning Engineering, and Software Engineering)

- Deciding on key interfaces and designs: user interface and experience (UI/UX) and feature engineering

- Integrating the model and server infrastructure with existing software products

- Working with ML engineers and data scientists on tech stack design and decision making

- Shipping the AI product and managing it after release

- Coordinating with the engineering, infrastructure, and site reliability teams to ensure all shipped features can be supported at scale

If you’re an AI product manager (or about to become one), that’s what you’re signing up for. In this article, we turn our attention to the process itself: how do you bring a product to market?

Identifying the problem

The first step in building an AI solution is identifying the problem you want to solve, which includes defining the metrics that will demonstrate whether you’ve succeeded. It sounds simplistic to state that AI product managers should develop and ship products that improve metrics the business cares about. Though these concepts may be simple to understand, they aren’t as easy in practice.

Agreeing on metrics

It’s often difficult for businesses without a mature data or machine learning practice to define and agree on metrics. Politics, personalities, and the tradeoff between short-term and long-term outcomes can all contribute to a lack of alignment. Many companies face a problem that’s even worse: no one knows which levers contribute to the metrics that impact business outcomes, or which metrics are important to the company (such as those reported to Wall Street by publicly-traded companies). Rachel Thomas writes about these challenges in “The problem with metrics is a big problem for AI.” There isn’t a simple fix for these problems, but for new companies, investing early in understanding the company’s metrics ecosystem will pay dividends in the future.

The worst case scenario is when a business doesn’t have any metrics. In this case, the business probably got caught up in the hype about AI, but hasn’t done any of the preparation. (Fair warning: if the business lacks metrics, it probably also lacks discipline about data infrastructure, collection, governance, and much more.) Work with senior management to design and align on appropriate metrics, and make sure that executive leadership agrees and consents to using them before starting your experiments and developing your AI products in earnest. Getting this kind of agreement is much easier said than done, particularly because a company that doesn’t have metrics may never have thought seriously about what makes their business successful. It may require intense negotiation between different divisions, each of which has its own procedures and its own political interests. As Jez Humble said in a Velocity Conference training session, “Metrics should be painful: metrics should be able to make you change what you’re doing.” Don’t expect agreement to come simply.

Lack of clarity about metrics is technical debt worth paying down. Without clarity in metrics, it’s impossible to do meaningful experimentation.

Ethics

A product manager needs to think about ethics–and encourage the product team to think about ethics–throughout the whole product development process, but it’s particularly important when you’re defining the problem. Is it a problem that should be solved? How can the solution be abused? Those are questions that every product team needs to think about.

There’s a substantial literature about ethics, data, and AI, so rather than repeat that discussion, we’ll leave you with a few resources. Ethics and Data Science is a short book that helps developers think through data problems, and includes a checklist that team members should revisit throughout the process. The Markkula Center for Applied Ethics at Santa Clara University has an excellent list of resources, including an app to aid ethical decision-making. The Ethical OS also provides excellent tools for thinking through the impact of technologies. And finally–build a team that includes people of different backgrounds, and who will be affected by your products in different ways. It’s surprising (and saddening) how many ethical problems could have been avoided if more people thought about how the products would be used. AI is a powerful tool: use it for good.

Addressing the problem

Once you know which metrics are most important, and which levers affect them, you need to run experiments to be sure that the AI products you want to develop actually map to those business metrics.

Experiments allow AI PMs not only to test assumptions about the relevance and functionality of AI Products, but also to understand the effect (if any) of AI products on the business. AI PMs must ensure that experimentation occurs during three phases of the product lifecycle:

- Phase 1: Concept

During the concept phase, it’s important to determine if it’s even possible for an AI product “intervention” to move an upstream business metric. Qualitative experiments, including research surveys and sociological studies, can be very useful here.

For example, many companies use recommendation engines to boost sales. But if your product is highly specialized, customers may come to you knowing what they want, and a recommendation engine just gets in the way. Experimentation should show you how your customers use your site, and whether a recommendation engine would help the business.

- Phase 2: Pre-deployment

In the pre-deployment phase, it’s essential to ensure that certain metrics thresholds are not violated by the core functionality of the AI product. These measures are commonly referred to as guardrail metrics, and they ensure that the product analytics aren’t giving decision-makers the wrong signal about what’s actually important to the business.

For example, a business metric for a rideshare company might be to reduce pickup time per user; the guardrail metric might be to maximize trips per user. An AI product could easily reduce average pickup time by dropping requests from users in hard-to-reach locations. However, that action would result in negative business outcomes for the company overall, and ultimately slow adoption of the service. If this sounds fanciful, it’s not hard to find AI systems that took inappropriate actions because they optimized a poorly thought-out metric. The guardrail metric is a check to ensure that an AI doesn’t make a “mistake.”
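The rideshare example above can be sketched as a simple pre-deployment check. This is a minimal illustration, not a prescribed implementation: the names `MetricSummary` and `passes_guardrail`, the sample numbers, and the 2% tolerance are all hypothetical choices made for this sketch.

```python
from dataclasses import dataclass


@dataclass
class MetricSummary:
    avg_pickup_minutes: float  # primary business metric: lower is better
    trips_per_user: float      # guardrail metric: must not fall noticeably


def passes_guardrail(control: MetricSummary, treatment: MetricSummary,
                     max_guardrail_drop: float = 0.02) -> bool:
    """Return True only if the primary metric improves AND the guardrail holds.

    max_guardrail_drop is an assumed tolerance (2% here); a real team would
    agree on this threshold with the business before running experiments.
    """
    primary_improved = treatment.avg_pickup_minutes < control.avg_pickup_minutes
    guardrail_floor = control.trips_per_user * (1 - max_guardrail_drop)
    guardrail_held = treatment.trips_per_user >= guardrail_floor
    return primary_improved and guardrail_held


# Made-up numbers for illustration only.
control = MetricSummary(avg_pickup_minutes=6.0, trips_per_user=4.0)
# A model that "wins" on pickup time by dropping hard-to-reach riders.
gamed = MetricSummary(avg_pickup_minutes=4.5, trips_per_user=3.2)
# A model that improves pickup time without losing trips.
honest = MetricSummary(avg_pickup_minutes=5.5, trips_per_user=4.1)

print(passes_guardrail(control, gamed))
print(passes_guardrail(control, honest))
```

The point of the design is that an improvement in the primary metric is never accepted on its own; it only counts when the guardrail is also satisfied.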

When a measure becomes a target, it ceases to be a good measure (Goodhart’s Law). Any metric can and will be abused. It is useful (and fun) for the development team to brainstorm creative ways to game the metrics, and think about the unintended side-effects this might have. The PM just needs to gather the team and ask “Let’s think about how to abuse the pickup time metric.” Someone will inevitably come up with “To minimize pickup time, we could just drop all the rides to or from distant locations.” Then you can think about what guardrail metrics (or other means) you can use to keep the system working appropriately.

- Phase 3: Post-deployment

After deployment, the product needs to be instrumented to ensure that it continues to behave as expected, without harming other systems. Ongoing monitoring of critical metrics is yet another form of experimentation. AI performance tends to degrade over time as the environment changes. You can’t stop watching metrics just because the product has been deployed.

For example, an AI product that helps a clothing manufacturer understand which materials to buy will become stale as fashions change. If the AI product is successful, it may even cause those changes. You must detect when the model has become stale, and retrain it as necessary.
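One way to detect that a model's inputs have gone stale is to compare the distribution of a feature at training time with what the model sees in production. The sketch below uses the Population Stability Index (PSI), a common drift heuristic; it is our illustration rather than a method the article prescribes. The function name, the bin count, and the sample data are assumptions, and the often-quoted alert level of roughly 0.2 is a rule of thumb, not a standard.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of one numeric feature; larger means more drift."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the training range into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids taking log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Made-up data: production values have shifted toward the upper half.
training = [i / 100 for i in range(100)]
production = [0.5 + i / 200 for i in range(100)]

psi = population_stability_index(training, production)
if psi > 0.2:  # assumed alert threshold
    print("Feature distribution has shifted; consider retraining.")
```

A check like this only flags that inputs have changed. Whether the model's predictions have actually degraded still has to be confirmed against business and model metrics.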

Fault Tolerant Versus Fault Intolerant AI Problems

AI product managers need to understand how sensitive their project is to error. This isn’t always simple, since it doesn’t just take into account technical risk; it also has to account for social risk and reputational damage. As we mentioned in the first article of this series, an AI application for product recommendations can make a lot of mistakes before anyone notices (ignoring concerns about bias); this has business impact, of course, but doesn’t cause life-threatening harm. On the other hand, an autonomous vehicle really can’t afford to make any mistakes; even if the autonomous vehicle is safer than a human driver, you (and your company) will take the blame for any accidents.

Planning and managing the project

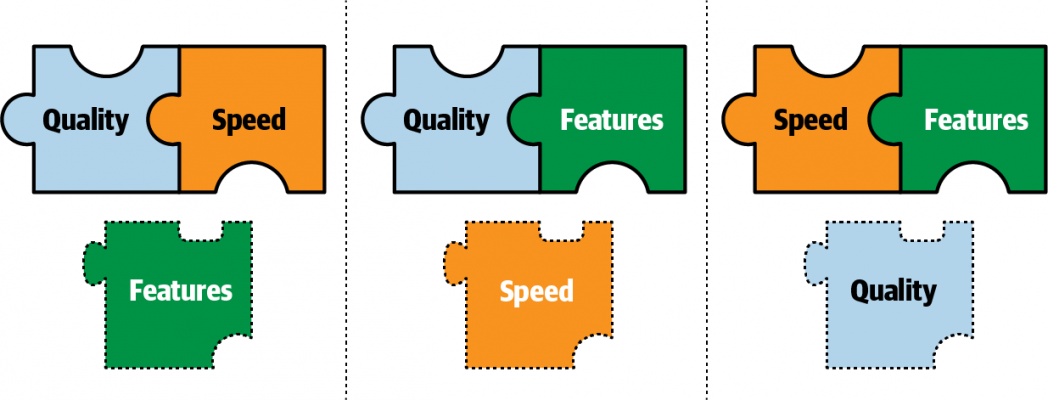

AI PMs have to make tough choices when deciding where to apply limited resources. It’s the old “choose two” rule, where the parameters are Speed, Quality, and Features. For example, for a mobile phone app that uses object detection to identify pets, speed is a requirement. A product manager may sacrifice either a more diverse set of animals, or the accuracy of detection algorithms. These decisions have dramatic implications on project length, resources, and goals.

Similarly, AI product managers often need to choose whether to prioritize the scale and impact of a product over the difficulty of product development. Years ago a health and fitness technology company realized that its content moderators, used to manually detect and remediate offensive content on its platform, were experiencing extreme fatigue and very poor mental health outcomes. Even beyond the humane considerations, moderator burnout was a serious product issue, in that the company’s platform was rapidly growing, thus exposing the average user to more potentially offensive or illegal content. The difficulty of content moderation work was exacerbated by its repetitive nature, making it a candidate for automation via AI. However, the difficulty of developing a robust content moderation system at the time was significant, and would have required years of development time and research. Ultimately, the company decided to simply drop the most social components of the platform, a decision which limited overall growth. This tradeoff between impact and development difficulty is particularly relevant for products based on deep learning: breakthroughs often lead to unique, defensible, and highly lucrative products, but investing in products with a high chance of failure is an obvious risk. Products based on deep learning can be difficult (or even impossible) to develop; it’s a classic “high return versus high risk” situation, in which it is inherently difficult to calculate return on investment.

The final major tradeoff that AI product managers must evaluate is how much time to spend during the R&D and design phases. With no restrictions on release dates, PMs and engineers alike would choose to spend as much time as necessary to nail the product goals. But in the real world, products need to ship, and there’s rarely enough time to do the research necessary to ship the best possible product. Therefore, product managers must make a judgment call about when to ship, and that call is usually based on incomplete experimental results. It’s a balancing act, and admittedly, one that can be very tricky: achieving the product’s goals versus getting the product out there. As with traditional software, the best way to achieve your goals is to put something out there and iterate. This is particularly true for AI products. Microsoft, LinkedIn, and Airbnb have been especially candid about their journeys towards building an experiment-driven culture and the technology required to support it. Some of the best lessons are captured in Ron Kohavi, Diane Tang, and Ya Xu’s book: Trustworthy Online Controlled Experiments: A Practical Guide to A/B Testing.

The AI Product Development Process

The development phases for an AI project map nearly 1:1 to the AI Product Pipeline we described in the second article of this series.

AI projects require a “feedback loop” in both the product development process and the AI products themselves. Because AI products are inherently research-based, experimentation and iterative development are necessary. Unlike traditional software development, in which the inputs and results are often deterministic, the AI development cycle is probabilistic. This requires several important modifications to how projects are set up and executed, regardless of the project management framework.

Understand the Customer and Objectives

Product managers must ensure that AI projects gather qualitative information about customer behavior. Because it might not be intuitive, it’s important to note that traditional data measurement tools are more effective at measuring magnitude than sentiment. For most AI products, the product manager will be less interested in the click-through rate (CTR) and other quantitative metrics than they are in the utility of the AI product to the user. Therefore, traditional product research teams must engage with the AI team to ensure that the correct intuition is applied to AI product development, as AI practitioners are likely to lack the appropriate skills and experience. CTRs are easy to measure, but if you build a system designed to optimize these kinds of metrics, you might find that the system sacrifices actual usefulness and user satisfaction. In this case, no matter how well the AI product contributes to such metrics, its output won’t ultimately serve the goals of the company.

It’s easy to focus on the wrong metric if you haven’t done the proper research. One mid-sized digital media company we interviewed reported that their Marketing, Advertising, Strategy, and Product teams once wanted to build an AI-driven user traffic forecast tool. The Marketing team built the first model, but because it was from marketing, the model optimized for CTR and lead conversion. The Advertising team was more interested in cost per lead (CPL) and lifetime value (LTV), while the Strategy team was aligned to corporate metrics (revenue impact and total active users). As a result, many of the tool’s users were dissatisfied, even though the AI functioned perfectly. The ultimate result was the development of multiple models that optimize for different metrics, and the redesign of the tool so that it could present those outputs clearly and intuitively to different kinds of users.

Internally, AI PMs must engage stakeholders to ensure alignment with the most important decision-makers and top-line business metrics. Put simply, no AI product will be successful if it never launches, and no AI product will launch unless the project is sponsored, funded, and connected to important business objectives.

Data Exploration and Experimentation

This phase of an AI project is laborious and time consuming, but completing it is one of the strongest indicators of future success. A product needs to balance the investment of resources against the risks of moving forward without a full understanding of the data landscape. Acquiring data is often difficult, especially in regulated industries. Once relevant data has been obtained, understanding what is valuable and what is simply noise requires statistical and scientific rigor. AI product managers probably won’t do the research themselves; their role is to guide data scientists, analysts, and domain experts towards a product-centric evaluation of the data, and to inform meaningful experiment design. The goal is to have a measurable signal for what data exists, solid insights into that data’s relevance, and a clear vision of where to concentrate efforts in designing features.

Data Wrangling and Feature Engineering

Data wrangling and feature engineering is the most difficult and important phase of every AI project. It’s generally accepted that, during a typical product development cycle, 80% of a data scientist’s time is spent in feature engineering. Trends and tools in AutoML and Deep Learning have certainly reduced the time, skills, and effort required to build a prototype, if not an actual product. Nonetheless, building a superior feature pipeline or model architecture will always be worthwhile. AI product managers should make sure project plans account for the time, effort, and people needed.

Modeling and Evaluation

The modeling phase of an AI project is frustrating and difficult to predict. The process is inherently iterative, and some AI projects fail (for good reason) at this point. It’s easy to understand what makes this step difficult: there is rarely a sense of steady progress towards a goal. You experiment until something works; that might happen on the first day, or the hundredth day. An AI product manager must motivate the team members and stakeholders when there is no tangible “product” to show for everyone’s labor and investment. One strategy for maintaining motivation is to push for short-term bursts to beat a performance baseline. Another would be to start multiple threads (possibly even multiple projects), so that some will be able to demonstrate progress.

Deployment

Unlike their counterparts on traditional software engineering projects, AI product managers must be heavily involved in the build process. In traditional projects, engineering managers are usually responsible for making sure all the components of a software product are properly compiled into binaries, and for organizing build scripts meticulously by version to ensure reproducibility. Many mature DevOps processes and tools, honed over years of successful software product releases, make these processes more manageable, but they were developed for traditional software products. The equivalent tools and processes often do not exist in the ML/AI ecosystem; when they do, they are rarely mature enough to use at scale. As a result, AI PMs must take a high-touch, customized approach to guiding AI products through production, deployment, and release.

Monitoring

Like any other production software system, after an AI product is live it must be monitored. However, for an AI product, both model performance and application performance must be monitored simultaneously. Alerts that are triggered when the AI product performs out of specification may need to be routed differently; the in-place SRE team may not be able to diagnose technical issues with the model or data pipelines without support from the AI team.

Though it’s difficult to create the “perfect” project plan for monitoring, it’s important for AI PMs to ensure that project resources (especially engineering talent) aren’t immediately released when the product has been deployed. Unlike a traditional software product, it’s hard to define when an AI product has been deployed successfully. The development process is iterative, and it’s not over after the product has been deployed–though, post-deployment, the stakes are higher, and your options for dealing with issues are more limited. Therefore, members of the development team must remain on the maintenance team to ensure that there is proper instrumentation for logging and monitoring the product’s health, and to ensure that there are resources available to deal with the inevitable problems that show up after deployment. (We call this “debugging” to distinguish it from the evaluation and testing that takes place during product development. The final article in this series will be devoted to debugging.)

Among operations engineers, the idea of observability is gradually replacing monitoring. Monitoring requires you to predict the metrics you need to watch in advance. That ability is certainly important for AI products–we’ve talked all along about the importance of metrics. Observability is critically different. Observability is the ability to get the information you need to understand why the system behaves the way it does; it’s less about measuring known quantities, and more about the ability to diagnose “unknown unknowns.”

Executing on an AI Product Roadmap

We’ve spent a lot of time talking about planning. Now let’s shift gears and discuss what’s needed to build a product. After all, that’s the point.

AI Product Interface Design

The AI product manager must be a member of the design team from the start, ensuring that the product provides the desired outcomes. It’s important to account for the ways a product will be used. In the best AI products, users can’t tell how the underlying models impact their experience. They neither know nor care that there is AI in the application. Take Stitch Fix, which uses a multitude of algorithmic approaches to provide customized style recommendations. When a Stitch Fix user interacts with its AI products, they interface with the prediction and recommendation engines. The information they interact with during that experience is an AI product–but they neither know, nor care, that AI is behind everything they see. If the algorithm makes a perfect prediction, but the user can’t imagine wearing the items they’re shown, the product is still a failure. In reality, ML models are far from perfect, so it is even more imperative to nail the user experience.

To do so, product managers must ensure that design gets an equal seat at the table with engineering. Designers are more attuned to qualitative research about user behavior. What signals show user satisfaction? How do you build products that delight users? Apple’s sense of design, making things that “just work,” pioneered through the iPod, iPhone, and iPad products is the foundation of their business. That’s what you need, and you need that input from the beginning. Interface design isn’t an after-the-fact add-on.

Picking the Right Scope

“Creeping featurism” is a problem with any software product, but it’s a particularly dangerous problem for AI. Focus your product development effort on problems that are relevant to the business and consumer. A successful AI product measurably (and positively) impacts metrics that matter to the business. Therefore, limit the scope of an AI product to features that can create this impact.

To do so, start with a well-framed hypothesis that, upon validation through experimentation, will produce meaningful outcomes. Doing this effectively means that AI PMs must learn to translate business intuitions into product development tools and processes. For example, if the business seeks to understand more about its customer base in order to maximize lifetime value for a subscription product, an AI PM would do well to understand the tools available for customer and product-mix segmentation, recommendation engines, and time-series forecasting. Then, when it comes to developing the AI product roadmap, the AI PM can focus engineering and AI teams on the right experiments, the correct outcomes, and the smoothest path to production.

It is tempting to over-value the performance gains achieved through the use of more complex modeling techniques, leading to the dreaded “black box” problem: models for which it’s difficult (if not impossible) to understand the relationship between the input and the output. Black box models are seldom useful in business environments for several reasons. First, being able to explain how the model works is often a prerequisite for executive approval. Second, ethical and regulatory considerations often require a detailed understanding of the data, derived features, pipelines and scoring mechanisms involved in the AI system. Solving problems with the simplest model possible is always preferable, and not just because it leads to models that are interpretable. In addition, simpler modeling approaches are more likely to be supported by a wide variety of frameworks, data platforms, and languages, increasing interoperability and decreasing technical debt.

Another scoping consideration concerns the processing engine that will power the product. Problems that are real-time (or near real-time) in nature can only be addressed by highly performant stream processing architectures. Examples of this include product recommendations in e-commerce systems or AI-enabled messaging. Stream processing requires significant engineering effort, and it’s important to account for that effort at the beginning of development. Some machine learning approaches (and many software engineering practices) are simply not appropriate for near-real time applications. If the problem at hand is more flexible and less interactive (such as offline churn probability prediction), batch processing is probably a good approach, and is typically easier to integrate with the average data stack.

Prototypes and Data Product MVPs

Entrepreneurial product managers are often associated with the phrase “Move Fast and Break Things.” AI product managers live and die by “Experiment Fast So You Don’t Break Things Later.” Take any social media company that sells advertisements. The timing, quantity, and type of ads displayed to segments of a company’s user population are overwhelmingly determined by algorithms. Customers contract with the social media company for a certain fixed budget, expecting to achieve certain audience exposure thresholds that can be measured by relevant business metrics. The budget that is actually spent successfully is referred to as fulfillment, and is directly related to the revenue that each customer generates. Any change to the underlying models or data ecosystem, such as how certain demographic features are weighted, can have a dramatic impact on the social media company’s revenue. Experimenting with new models is essential–but so is yanking an underperforming model out of production. This is only one example of why rapid prototyping is important for teams building AI products. AI PMs must create an environment in which continuous experimentation and failure are allowed (even celebrated), along with supporting the processes and tools that enable experimentation and learning through failure.

In a previous section, we introduced the importance of user research and interface design. Qualitative data collection tools (such as SurveyMonkey, Qualtrics, and Google Forms) should be joined with interface prototyping tools (such as Invision and Balsamiq), and with data prototyping tools (such as Jupyter Notebooks) to form an ecosystem for product development and testing.

Once such an environment exists, it’s important for the product manager to codify what constitutes a “minimum viable” AI product (MVP). This product should be robust enough to be used for user research and quantitative (model evaluation) experimentation, but simple enough that it can be quickly discarded or adjusted in favor of new iterations. And, while the word “minimum” is important, don’t forget “viable.” An MVP needs to be a product that can stand on its own, something that customers will want and use. If the product isn’t “viable” (i.e., if a user wouldn’t want it) you won’t be able to conduct good user research. Again, it’s important to listen to data scientists, data engineers, software developers, and design team members when deciding on the MVP.

Data Quality and Standardization

In most organizations, Data Quality is either an engineering or IT problem; it is rarely addressed by the product team until it blocks a downstream process or project. This relationship is impossible for teams developing AI products. “Garbage in, garbage out” holds true for AI, so good AI PMs must concern themselves with data health.

There are many excellent resources on data quality and data governance. The specifics are outside the scope of this article, but here are some core principles that should be incorporated into any product manager’s toolkit:

- Beware of “data cleaning” approaches that damage your data. It’s not data cleaning if it changes the core properties of the underlying data.

- Look for peculiarities in your data (for example, data from legacy systems that truncate text fields to save space).

- Understand the risks of bad downstream standardization when planning and implementing data collection (e.g., arbitrary stemming or stop word removal).

- Ensure data stores, key pipelines, and queries are properly documented, with structured metadata and a well-understood data flow.

- Consider how time impacts your data assets, as well as seasonal effects and other biases.

- Understand that data bias and artifacts can be introduced by UX choices and survey design.
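As one concrete illustration of the "peculiarities" principle above, the sketch below flags a text column that may have been truncated by a legacy system: if a suspiciously large share of values have exactly the maximum observed length, the field was probably cut off. The function name `looks_truncated`, the 20% threshold, and the sample data are hypothetical; this is a heuristic meant to prompt a closer look, not a definitive test.

```python
from collections import Counter


def looks_truncated(values, min_share=0.2):
    """Heuristic: many non-empty values sharing the maximum length suggests truncation."""
    lengths = [len(v) for v in values if v]
    if not lengths:
        return False
    longest = max(lengths)
    share_at_max = Counter(lengths)[longest] / len(lengths)
    return share_at_max >= min_share


# Made-up columns: the first piles up at 255 characters, the second does not.
legacy_notes = ["short", "x" * 255, "y" * 255, "medium text", "z" * 255]
free_text = ["a", "bb", "ccc", "dddd", "eeeee", "ffffff"]

print(looks_truncated(legacy_notes))
print(looks_truncated(free_text))
```

Fixed-width codes (such as postal codes) will also trip this check, so a flag here is a reason to ask the data owner, not a reason to alter the data.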

Augmenting AI Product Management with Technical Leadership

There is no intuitive way to predict what will work best in AI product development. AI PMs can build amazing things, but this often comes largely from the right frameworks rather than the correct tactical actions. Many new tech capabilities have the potential to enable software engineering using ML/AI techniques more quickly and accurately. AI PMs will need to leverage new and emerging AI techniques (image upscaling, synthetic text generation using adversarial networks, reinforcement learning, and more), and partner with expert technologists to put these tools to use.

It’s unlikely that every AI PM will have world-class technical intuition in addition to excellent product sense, UI/UX experience, customer knowledge, leadership skills, and so on. But don’t let that breed pessimism. Since one person can’t be an expert at everything, AI PMs need to form a partnership with a technology leader (e.g., a Technical Lead or Lead Scientist) who knows the state of the art and is familiar with current research, and trust that tech leader’s educated intuition.

Finding this critical technical partner can be difficult, especially in today’s competitive talent market. However, all is not lost: there are many excellent technical product leaders out there masquerading as competent engineering managers.

Product manager Matt Brandwein suggests observing what potential tech leads do in their idle time, and taking note of which domains they find attractive. Someone’s current role often doesn’t reveal where their interests and talent lie. Most importantly, the AI PM should look for a tech lead who can mitigate their own weaknesses. For example, if the AI PM is a visionary, picking a technical lead with operational experience is a good idea.

Testing ML/AI Products

When a product is ready to ship, the PM will work with user research and engineering teams to develop a release plan that collects both qualitative and quantitative user feedback. The bulk of this data will be concentrated on user interaction with the user interface and front end of the product. AI PMs must also plan to collect data about the “hidden” functionality of the AI product, the part no user ever sees directly: model performance. We’ve discussed the need for proper instrumentation at both the model and business levels to gauge the product’s effectiveness; this is where all of that planning and hard work pays off!

On the model side, performance metrics that were validated during development (predictive power, model fit, precision) must be constantly re-evaluated as the model is exposed to more and more unseen data. A/B testing, which is frequently used in web-based software development, is useful for evaluating model performance in production. Most companies already have a framework for A/B testing in their release process, but some may need to invest in testing infrastructure. Such investments are well worth it.
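To make the A/B comparison concrete, here is a minimal sketch of a two-proportion z-test, one standard way to judge whether a difference in conversion rate between two variants is likely to be real. It uses only the Python standard library; the function name and the sample counts are made up for illustration, and a production experimentation platform would typically handle this (plus issues such as peeking and multiple comparisons) for you.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference in success rates B - A."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No variation at all (every trial succeeded or failed): no evidence of a difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up counts: variant A converts 100 of 1000 users, variant B 150 of 1000.
z, p_value = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```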

It’s inevitable that the model will require adjustments over time, so AI PMs must ensure that whoever is responsible for the product post-launch has access to the development team in order to investigate and resolve issues. Here, A/B testing has another benefit: the ability to run champion/challenger model evaluations. This framework allows for a deployed model to run uninterrupted, while a second model is evaluated against a sample of the total population. If the second model outperforms the original, it can simply be swapped in, often without any downtime!
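A champion/challenger setup needs a stable way to decide which users see which model. The sketch below shows one common approach, assumed here for illustration: hash the user ID into a number between 0 and 1 and send a fixed share of users to the challenger. The function name `assign_model` and the 10% share are hypothetical.

```python
import hashlib


def assign_model(user_id: str, challenger_share: float = 0.1) -> str:
    """Deterministically route a user to the champion or the challenger model."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash to a roughly uniform value in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "challenger" if bucket < challenger_share else "champion"


# The same user always gets the same model, so their experience is consistent.
print(assign_model("user-42"))

# Across many users, about 10% land on the challenger.
assignments = [assign_model(f"user-{i}") for i in range(10_000)]
print(assignments.count("challenger") / len(assignments))
```

Because assignment depends only on the user ID, no assignment table has to be stored, and promoting the challenger amounts to raising `challenger_share` to 1.0.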

Overall, AI PMs should remain closely involved in the early release lifecycle for AI products, taking responsibility for coordinating and managing A/B tests and user data collection, and resolving issues with the product’s functionality.

Conclusion

In this article, we’ve focused primarily on the AI product development process, and mapping the AI product manager’s responsibilities to each stage of that process. As with many other digital product development cycles, AI PMs must first ensure that the problem to be solved is both a problem that ML/AI can solve and a problem that is vital to the business. Once these criteria have been met, the AI PM must consider whether the product should be developed, weighing the many technical and ethical considerations at play when developing and releasing a production AI system.

We propose the AI Product Development Process as a blueprint for AI PMs of all industries, who may develop myriad different AI products. Though this process is by no means exhaustive, it emphasizes the kind of critical thinking and cross-departmental collaboration necessary for success at each stage of the AI product lifecycle. However, regardless of the process you use, experimentation is the key to success. We’ve said that repeatedly, and we aren’t tired: the more experiments you can do, the more likely you are to build a product that works (i.e., positively impacts metrics the company cares about). And don’t forget qualitative metrics that help you understand user behavior!

Once an AI system is released and in use, however, the AI PM has a somewhat unique role in product maintenance. Unlike PMs for many other software products, AI PMs must ensure that robust testing frameworks are built and utilized not only during the development process, but also in post-production. Our next article focuses on perhaps the most important phase of the AI product lifecycle: maintenance and debugging.

from Radar https://ift.tt/32Xlq9c
